Fundamentals

These papers address more fundamental problems of view-synthesis with NeRF methods.Grid-guided Neural Radiance Fields for Large Urban Scenes

Linning Xu, Yuanbo Xiangli, Sida Peng, Xingang Pan, Nanxuan Zhao, Christian Theobalt, Bo Dai, Dahua Lin Figure 1. We perform large urban scene rendering with a novel grid-guided neural radiance fields. An

example of our target large urban scene is shown on the left, which spans over 2.7km2 ground areas

captured by over 5k drone images. We show that the rendering results from NeRF-based methods tend to

display noisy artifacts when adapting to large-scale scenes with high-resolution feature grids. Our

proposed two-branch model combines the merits from both approaches and achieves photorealistic novel

view renderings with remarkable improvements over existing methods. Both two branches gain significant

enhancements over their individual baselines. (Project page: https://city-super.github.io/gridnerf)

Figure 1. We perform large urban scene rendering with a novel grid-guided neural radiance fields. An

example of our target large urban scene is shown on the left, which spans over 2.7km2 ground areas

captured by over 5k drone images. We show that the rendering results from NeRF-based methods tend to

display noisy artifacts when adapting to large-scale scenes with high-resolution feature grids. Our

proposed two-branch model combines the merits from both approaches and achieves photorealistic novel

view renderings with remarkable improvements over existing methods. Both two branches gain significant

enhancements over their individual baselines. (Project page: https://city-super.github.io/gridnerf)

The authors propose a compact multiresolution ground feature plane representation combined with a

positional encoding-based NeRF branch to handle scenes with complex geometry and texture. This integration

allows for efficient rendering of photorealistic novel views with fine details.

Neural Residual Radiance Fields for Streamably Free-Viewpoint Videos

Liao Wang, Qiang Hu, Qihan He, Ziyu Wang, Jingyi Yu, Tinne Tuytelaars, Lan Xu, Minye Wu Figure 1. Our proposed ReRF utilizes a residual radiance field and a global MLP to enable highly

compressible and streamable radiance field modeling. Our ReRF-based codec scheme and streaming player

gives users a rich interactive experience.

Figure 1. Our proposed ReRF utilizes a residual radiance field and a global MLP to enable highly

compressible and streamable radiance field modeling. Our ReRF-based codec scheme and streaming player

gives users a rich interactive experience.

ReRF extends NeRF to handle long-duration dynamic scenes in real-time by modeling residuals between

adjacent timestamps in a spatial-temporal feature space with a global coordinate-based MLP feature

decoder. The method uses a compact motion grid and employs a sequential training scheme. ReRF enables

online streaming of FVVs of dynamic scenes.

SteerNeRF: Accelerating NeRF Rendering via Smooth Viewpoint Trajectory

Sicheng Li, Hao Li, Yue Wang, Yiyi Liao, Lu Yu Figure 1. Illustration. We exploit smooth viewpoint trajectory to accelerate NeRF rendering, achieved by

performing volume rendering at a low resolution and recovering the target image guided by multiple

viewpoints. Our method enables fast rendering with a low memory footprint.

Figure 1. Illustration. We exploit smooth viewpoint trajectory to accelerate NeRF rendering, achieved by

performing volume rendering at a low resolution and recovering the target image guided by multiple

viewpoints. Our method enables fast rendering with a low memory footprint.

NeRF-MVS improves the efficiency of NeRF rendering by keyframe prior knowledge, allowing the information

of earlier rendered images to influence later ones. Moreover, the use of a 2D renderer decreases memory

consumption and overhead.

Compressing Volumetric Radiance Fields to 1 MB

Lingzhi Li, Zhen Shen, Zhongshu Wang, Li Shen, Liefeng Bo Figure 1. Our compression pipeline realizes 100X compression rate while maintaining the rendering

quality of the original volumetric model.

Figure 1. Our compression pipeline realizes 100X compression rate while maintaining the rendering

quality of the original volumetric model.

The VQRF framework compresses volume-grid-based radiance fields to overcome their memory and disk space

issues. The framework includes voxel pruning, a trainable vector quantization, and an efficient joint

tuning strategy, which achieves high generalization with negligible loss of visual quality.

DINER: Disorder-Invariant Implicit Neural Representation

Shaowen Xie, Hao Zhu, Zhen Liu, Qi Zhang, You Zhou, Xun Cao, Zhan Ma Figure 1. PSNR of various INRs on 2D image fitting over different training epochs.

Figure 1. PSNR of various INRs on 2D image fitting over different training epochs.

DINER solves the spectral bias in implicit neural representation by rearranging input coordinates to

enable improved mapping of the signal to a more uniform distribution. The method demonstrated improved

reconstruction quality and speed when compared with other state-of-the-art algorithms.

FreeNeRF: Improving Few-shot Neural Rendering with Free Frequency Regularization

Jiawei Yang, Marco Pavone, Yue Wang Figure 3. Illustration of occlusion regularization. We show 3 training views (solid rectangles) and 2

novel views (dashed rectangles) rendered by a frequency-regularized NeRF. The floaters in the novel views

appear to be near-camera dense fields in the training views (dashed circles) so that we can penalize them

directly without the need for the costly novel-view rendering in .

Figure 3. Illustration of occlusion regularization. We show 3 training views (solid rectangles) and 2

novel views (dashed rectangles) rendered by a frequency-regularized NeRF. The floaters in the novel views

appear to be near-camera dense fields in the training views (dashed circles) so that we can penalize them

directly without the need for the costly novel-view rendering in .

FreeNeRF is a simple baseline that surpasses previous state-of-the-art methods in neural view synthesis

performance with sparse inputs. This is achieved by introducing two regularization terms at no additional

computational cost, aimed at controlling frequency range inputs and penalizing near-camera density fields.

The proposed technique presents an effective approach for low-data regimes of neural radiance fields

training.

Learning Neural Duplex Radiance Fields for Real-Time View Synthesis

Ziyu Wan, Christian Richardt, Aljaž Božič, Chao Li, Vijay Rengarajan, Seonghyeon Nam, Xiaoyu Xiang, Tuotuo Li, Bo Zhu, Rakesh Ranjan, Jing Liao Figure 1. Our framework efficiently learns the neural duplex radiance field from a NeRF model for

high-quality real-time view synthesis.

Figure 1. Our framework efficiently learns the neural duplex radiance field from a NeRF model for

high-quality real-time view synthesis.

NeRF to Mesh (N2M) converts Nerf models to mesh for real-time rendering. The representation uses a duplex

mesh and screen-space convolution for fast rendering. Furthermore, a multi-view distillation optimization

strategy is used to improve performance.

Neuralangelo: High-Fidelity Neural Surface Reconstruction

Zhaoshuo Li, Thomas Müller, Alex Evans, Russell H. Taylor, Mathias Unberath, Ming-Yu Liu, Chen-Hsuan Lin Figure 1. We present Neuralangelo, a framework for high-fidelity 3D surface reconstruction from RGB

images using neural volume rendering, even without auxiliary data such as segmentation or depth. Shown

in the figure is an extracted 3D mesh of a courthouse.

Figure 1. We present Neuralangelo, a framework for high-fidelity 3D surface reconstruction from RGB

images using neural volume rendering, even without auxiliary data such as segmentation or depth. Shown

in the figure is an extracted 3D mesh of a courthouse.

Neuralangelo synthesizes high-quality 3D models from images by first learning a multi-resolution implicit

function representative of a geometry structure, then using a smoothed representation to improve the

optimization of the hash grid.

PermutoSDF: Fast Multi-View Reconstruction with Implicit Surfaces using Permutohedral Lattices

Radu Alexandru Rosu, Sven Behnke Figure 1. Given multi-view images, we recover both high quality geometry as an implicit SDF and

appearance which can be rendered in real-time.

Figure 1. Given multi-view images, we recover both high quality geometry as an implicit SDF and

appearance which can be rendered in real-time.

PermutoSDF proposes a permutohedral lattice implicit surface representation that can recover

high-frequency geometric details on arbitrary mesh at a lower cost than voxel hashing. The underlying

approach uses hybrid density and SDF optimization for high-quality surface reconstruction from RGB images.

Multi-Space Neural Radiance Fields

Ze-Xin Yin, Jiaxiong Qiu, Ming-Ming Cheng, Bo Ren Figure 1. (a) Though Mip-NeRF 360 can handle unbounded scenes, it still suffers from reflective

surfaces, as the virtual images violate the multi-view consistency, which is of vital importance to

NeRF-based methods. (b) Our method can help conventional NeRF-like methods learn the virtual images with

little extra cost.

Figure 1. (a) Though Mip-NeRF 360 can handle unbounded scenes, it still suffers from reflective

surfaces, as the virtual images violate the multi-view consistency, which is of vital importance to

NeRF-based methods. (b) Our method can help conventional NeRF-like methods learn the virtual images with

little extra cost.

MS-NeRF proposes a multi-space approach to address the problem of blurry or distorted renderings caused by

reflective objects in existing NeRF methods. By using feature fields in parallel subspaces, the MS-NeRF

shows better performance with minimal computational overheads needed for training and inferring.

NeRFLight: Fast and Light Neural Radiance Fields using a Shared Feature Grid

Fernando Rivas-Manzaneque, Jorge Sierra-Acosta, Adrian Penate-Sanchez, Francesc Moreno-Noguer, Angela Ribeiro Figure 1. NeRFLight is able to double the FPS/MB ratio of the second best method while obtaining similar

quality metrics to state-of-the-art.

Figure 1. NeRFLight is able to double the FPS/MB ratio of the second best method while obtaining similar

quality metrics to state-of-the-art.

The authors introduce a lightweight neural radiance field, called SplitNeRF, which separates the density

field into regions. A different decoder is employed to model the density field of each region,

simultaneously using the same feature grid. This new architecture produces a smaller but more compact

representation than existing methods.

Cross-Guided Optimization of Radiance Fields with Multi-View Image Super-Resolution for High-Resolution Novel View Synthesis

Youngho Yoon, Kuk-Jin Yoon Figure 1. Cross-guided optimization between single image superresolution and radiance fields. They

complement weaknesses of one another with their respective strengths by using the SR update module,

rendered train-view RGBs, and uncertainty maps.

Figure 1. Cross-guided optimization between single image superresolution and radiance fields. They

complement weaknesses of one another with their respective strengths by using the SR update module,

rendered train-view RGBs, and uncertainty maps.

The proposed method uses a cross-guided optimization framework that performs multi-view image

super-resolution (MVSR) on train-view images during the optimization process of radiance fields. This

iterative process improves multi-view consistency and high-frequency details, which ultimately leads to

better performance in high-resolution novel view synthesis. The method outperforms existing methods on

various benchmark datasets.

HelixSurf: A Robust and Efficient Neural Implicit Surface Learning of Indoor Scenes with Iterative Intertwined Regularization

Zhihao Liang, Zhangjin Huang, Changxing Ding, Kui Jia Figure 2. Overview of HelixSurf: Helix-shaped neural implicit Surface learning. HelixSurf integrates the

neural implicit surface learning (cf. Section 4.1) and PatchMatch based MVS (cf. Section 4.2) in a

robust and efficient manner. We optimize HelixSurf with an iterative intertwined regularization, which

uses the intermediate prediction from one strategy as guidance to regularize the learning/optimization

of the other one; given that MVS predictions are less reliable for textureless surface areas, we

additionally devise a scheme that regularizes the learning on such areas by leveraging the homogeneity

per superpixel in observed multi-view images (cf. Section 4.1.1). We also propose a scheme for point

sampling along rays (cf. Section 4.3), which significantly improves the efficiency. At the inference stage

of HelixSurf, we conduct grid sampling to query the learned SDF values at sampled points and run

Marching Cubes to get the reconstruction results.

Figure 2. Overview of HelixSurf: Helix-shaped neural implicit Surface learning. HelixSurf integrates the

neural implicit surface learning (cf. Section 4.1) and PatchMatch based MVS (cf. Section 4.2) in a

robust and efficient manner. We optimize HelixSurf with an iterative intertwined regularization, which

uses the intermediate prediction from one strategy as guidance to regularize the learning/optimization

of the other one; given that MVS predictions are less reliable for textureless surface areas, we

additionally devise a scheme that regularizes the learning on such areas by leveraging the homogeneity

per superpixel in observed multi-view images (cf. Section 4.1.1). We also propose a scheme for point

sampling along rays (cf. Section 4.3), which significantly improves the efficiency. At the inference stage

of HelixSurf, we conduct grid sampling to query the learned SDF values at sampled points and run

Marching Cubes to get the reconstruction results.

HelixSurf is proposed and combines traditional multi-view stereo with neural implicit surface learning for

reconstruction of complex scene surfaces using complementary benefits from the two methods. The authors

show favorable results with orders of magnitude faster computation times, even without relying on

auxiliary training data.

Neural Fourier Filter Bank

Zhijie Wu, Yuhe Jin, Kwang Moo Yi Figure 1. Teaser – We propose neural Fourier filter bank to perform spatial and frequency-wise

decomposition jointly, inspired by wavelets. Our method provides significantly improved reconstruction

quality given the same computation and storage budget, as represented by the PSNR curve and the error

image overlay. Relying only on space partitioning without frequency resolution (InstantNGP) or frequency

encodings without space resolution (SIREN) provides suboptimal performance and convergence. Simply

considering both (ModSine) enhances scalability when applied to larger scenes, but not in terms of

quality and convergence.

Figure 1. Teaser – We propose neural Fourier filter bank to perform spatial and frequency-wise

decomposition jointly, inspired by wavelets. Our method provides significantly improved reconstruction

quality given the same computation and storage budget, as represented by the PSNR curve and the error

image overlay. Relying only on space partitioning without frequency resolution (InstantNGP) or frequency

encodings without space resolution (SIREN) provides suboptimal performance and convergence. Simply

considering both (ModSine) enhances scalability when applied to larger scenes, but not in terms of

quality and convergence.

NFFB is a Fourier decomposition inspired neural field that separates scene signals into spatial and

frequency components. It outperforms other state-of-the-art models using this paradigm in terms of model

compactness and convergence speed across 2D image fitting, 3D shape reconstruction and neural radiance

fields.

Progressively Optimized Local Radiance Fields for Robust View Synthesis

Andreas Meuleman, Yu-Lun Liu, Chen Gao, Jia-Bin Huang, Changil Kim, Min H. Kim, Johannes Kopf Figure 2. Space parameterization. (a) NDC, used by NeRF for forward-facing scenes, maps a frustum to a

unit cube volume. While a sensible approach for forward-facing cameras, it is only able to represent a

small portion of a scene as the frustum cannot be extended beyond a field of view of 120◦ or so without

significant distortion. (b) Mip-NeRF360’s space contraction squeezes the background and fits the entire

space into a sphere of radius 2. It is designed for inward-facing 360 scenes and cannot scale to long

trajectories. (c) Our approach allocates several radiance fields along the camera trajectory. Each

radiance field maps the entire space to a cube (Equation (5)) and, each having its own center for

contraction (Equatione (7)), the high-resolution uncontracted space follows the camera trajectory and

our approach can adapt to any camera path.

Figure 2. Space parameterization. (a) NDC, used by NeRF for forward-facing scenes, maps a frustum to a

unit cube volume. While a sensible approach for forward-facing cameras, it is only able to represent a

small portion of a scene as the frustum cannot be extended beyond a field of view of 120◦ or so without

significant distortion. (b) Mip-NeRF360’s space contraction squeezes the background and fits the entire

space into a sphere of radius 2. It is designed for inward-facing 360 scenes and cannot scale to long

trajectories. (c) Our approach allocates several radiance fields along the camera trajectory. Each

radiance field maps the entire space to a cube (Equation (5)) and, each having its own center for

contraction (Equatione (7)), the high-resolution uncontracted space follows the camera trajectory and

our approach can adapt to any camera path.

The authors present an algorithm for reconstructing the radiance field of a large-scale scene from a

single casually captured video. To address the size of the scene and difficulty of camera pose estimation,

the method uses local radiance fields trained with frames within a temporal window in a progressive

manner. The method is shown to be more robust than existing approaches and performs well even under

moderate pose drifts.

MobileNeRF: Exploiting the Polygon Rasterization Pipeline for Efficient Neural Field Rendering on Mobile Architectures

Zhiqin Chen, Thomas Funkhouser, Peter Hedman, Andrea Tagliasacchi Figure 1. Teaser – We present a NeRF that can run on a variety of common devices at interactive frame

rates.

Figure 1. Teaser – We present a NeRF that can run on a variety of common devices at interactive frame

rates.

Poly-NeRF introduces a new representation for NeRFs that relies on standard hardware rendering instead of

its traditional algorithms for fast NeRF representation.

TMO: Textured Mesh Acquisition of Objects with a Mobile Device by using Differentiable Rendering

Jaehoon Choi, Dongki Jung, Taejae Lee, Sangwook Kim, Youngdong Jung, Dinesh Manocha, Donghwan Lee Figure 1. Example reconstruction results collected from a smartphone in the wild. (a) Data acquisition

setup. (b) Images captured from a smartphone. (c) A reconstructed mesh. (d) A novel view of textured

mesh. Our proposed method can reconstruct the highquality geometric mesh with a visually realistic

texture.

Figure 1. Example reconstruction results collected from a smartphone in the wild. (a) Data acquisition

setup. (b) Images captured from a smartphone. (c) A reconstructed mesh. (d) A novel view of textured

mesh. Our proposed method can reconstruct the highquality geometric mesh with a visually realistic

texture.

The authors propose a pipeline to capture textured meshes using a smartphone by incorporating structure

from motion and neural implicit surface reconstruction. The method allows for high-quality mesh

reconstruction with improved texture synthesis and does not require in-lab environments or accurate masks.

Structural Multiplane Image: Bridging Neural View Synthesis and 3D Reconstruction

Mingfang Zhang, Jinglu Wang, Xiao Li, Yifei Huang, Yoichi Sato, Yan Lu Figure 1. We propose the Structural Multiplane Image (S-MPI) representation to bridge the tasks of

neural view synthesis and 3D reconstruction. It consists of a set of posed RGBα images with geometries

approximating the 3D scene. The scene-adaptive S-MPI overcomes the critical limitations of standard MPI

, e.g., discretization artifacts (D) and repeated textures (R), and achieves a better depth map compared

with the previous planar reconstruction method, PlaneFormer .

Figure 1. We propose the Structural Multiplane Image (S-MPI) representation to bridge the tasks of

neural view synthesis and 3D reconstruction. It consists of a set of posed RGBα images with geometries

approximating the 3D scene. The scene-adaptive S-MPI overcomes the critical limitations of standard MPI

, e.g., discretization artifacts (D) and repeated textures (R), and achieves a better depth map compared

with the previous planar reconstruction method, PlaneFormer .

S-MPI extends MPI by using compact and expressive structures. The authors propose a transformer-based

network to predict S-MPI layers, their masks, poses, and RGBA contexts, and handle non-planar regions in a

special case. Experiments show superior performance over MPI-based methods for view synthesis and planar

reconstruction.

Neural Vector Fields for Implicit Surface Representation and Inference

Edoardo Mello Rella, Ajad Chhatkuli, Ender Konukoglu, Luc Van Gool Figure 1: Vector Field (VF) visualization on 2D surfaces. Each column shows the shape to represent in

black, and a zoomed in sample reconstruction of the surface inside the red box. VF can represent shapes

similar to SDF or binary occupancy, but also open surfaces. As the surface normals are directly encoded,

VF can reconstruct very sharp angles through additional regularization when needed.

Figure 1: Vector Field (VF) visualization on 2D surfaces. Each column shows the shape to represent in

black, and a zoomed in sample reconstruction of the surface inside the red box. VF can represent shapes

similar to SDF or binary occupancy, but also open surfaces. As the surface normals are directly encoded,

VF can reconstruct very sharp angles through additional regularization when needed.

This paper introduces a new 3D implicit field representation called Vector Field (VF) that encodes unit

vectors in 3D space. VF is directed at the closest point on the surface, and encodes surface normal

directly, which has superior accuracy in representing any type of shape, outperforming other standard

methods on several datasets including ShapeNet.

Towards Better Gradient Consistency for Neural Signed Distance Functions via Level Set Alignment

Baorui Ma, Junsheng Zhou, Yu-Shen Liu, Zhizhong Han Figure 2. Visualization of level sets on a cross section. We pursue better gradient consistency in a

field learned from 3D point clouds in (a) and multi-view images in (b). We minimize our level set

alignment loss with NeuralPull in (a) and NeuS in (b), which leads to more accurate SDFs with better

parallelism of level sets and less artifacts in empty space.

Figure 2. Visualization of level sets on a cross section. We pursue better gradient consistency in a

field learned from 3D point clouds in (a) and multi-view images in (b). We minimize our level set

alignment loss with NeuralPull in (a) and NeuS in (b), which leads to more accurate SDFs with better

parallelism of level sets and less artifacts in empty space.

The parallelism of level sets is the key factor affecting inference accuracy of neural SDFs. Toward Better

Gradient Consistency for Neural Signed Distance Functions from Point Clouds or Multi-View Images improves

accuracy using an adaptive alignment loss and constraining gradients at queries and their projections.

WIRE: Wavelet Implicit Neural Representations

Vishwanath Saragadam, Daniel LeJeune, Jasper Tan, Guha Balakrishnan, Ashok Veeraraghavan, Richard G. Baraniuk Figure 1. Wavelet implicit neural representation (WIRE). We propose a new nonlinearity for implicit

neural representations (INRs) based on the continuous complex Gabor wavelet that has high representation

capacity for visual signals. The top row visualizes two commonly used nonlinearities: SIREN with

sinusoidal nonlinearity and Gaussian nonlinearity, and WIRE that uses a continuous complex Gabor

wavelet. WIRE benefits from the frequency compactness of sine, and spatial compactness of a Gaussian

nonlinearity. The bottom row shows error maps for approximating an image with strong edges. SIREN

results in global ringing artifacts while Gaussian nonlinearity leads to compact but large error at

edges. WIRE produces results with the smallest and most spatially compact error. This enables WIRE to

learn representations rapidly and accurately, while being robust to noise and undersampling of data.

Figure 1. Wavelet implicit neural representation (WIRE). We propose a new nonlinearity for implicit

neural representations (INRs) based on the continuous complex Gabor wavelet that has high representation

capacity for visual signals. The top row visualizes two commonly used nonlinearities: SIREN with

sinusoidal nonlinearity and Gaussian nonlinearity, and WIRE that uses a continuous complex Gabor

wavelet. WIRE benefits from the frequency compactness of sine, and spatial compactness of a Gaussian

nonlinearity. The bottom row shows error maps for approximating an image with strong edges. SIREN

results in global ringing artifacts while Gaussian nonlinearity leads to compact but large error at

edges. WIRE produces results with the smallest and most spatially compact error. This enables WIRE to

learn representations rapidly and accurately, while being robust to noise and undersampling of data.

WIRE uses complex Gabor wavelets which favorably combine good properties for image processing tasks that

use implicit neural representation such as image denoising, image inpainting, and super-resolution. The

authors argue that WIRE outperforms current state-of-the-art INRs in terms of both accuracy and

robustness.

MixNeRF: Modeling a Ray with Mixture Density for Novel View Synthesis from Sparse Inputs

Seunghyeon Seo, Donghoon Han, Yeonjin Chang, Nojun Kwak Figure 1. Comparison with the vanilla mip-NeRF and other regularization methods. Given the same number

of training batch and iterations, our MixNeRF outperforms mip-NeRF and DietNeRF by a large margin with

comparable or shorter training time. Compared to RegNeRF , ours achieves superior performance with about

42% shortened training time. The size of the circles are proportional to the number of input views,

indicating 3/6/9-view, respectively. More details are provided in Sec. 4.2.

Figure 1. Comparison with the vanilla mip-NeRF and other regularization methods. Given the same number

of training batch and iterations, our MixNeRF outperforms mip-NeRF and DietNeRF by a large margin with

comparable or shorter training time. Compared to RegNeRF , ours achieves superior performance with about

42% shortened training time. The size of the circles are proportional to the number of input views,

indicating 3/6/9-view, respectively. More details are provided in Sec. 4.2.

MixNeRF improves the training efficiency of novel-view synthesis by modeling a ray with a mixture density

model. The method also introduces a new task of ray depth estimation as a training objective and

regenerates blending weights to improve robustness.

Looking Through the Glass: Neural Surface Reconstruction Against High Specular Reflections

Jiaxiong Qiu, Peng-Tao Jiang, Yifan Zhu, Ze-Xin Yin, Ming-Ming Cheng, Bo Ren Figure 1. 3D object surface reconstruction under high specular reflections (HSR). Top: A real-world scene

captured by a mobile phone. Middle: The state-of-the-art method NeuS fails to reconstruct the target

object (i.e., the Buddha). Bottom: We propose NeuS-HSR, which recovers a more accurate target object

surface than NeuS.

Figure 1. 3D object surface reconstruction under high specular reflections (HSR). Top: A real-world scene

captured by a mobile phone. Middle: The state-of-the-art method NeuS fails to reconstruct the target

object (i.e., the Buddha). Bottom: We propose NeuS-HSR, which recovers a more accurate target object

surface than NeuS.

While implicit neural rendering provides high-quality 3D object surfaces, high specular reflections in

scenes through glass cause ambiguities that challenge their reconstruction. NeuS-HSR combats this

challenge by decomposing the rendered image into two appearances: the object and auxiliary plane. The

auxiliary plane appearance is generated by a novel auxiliary plane module that integrates physics

assumptions with neural networks.

NeuralUDF: Learning Unsigned Distance Fields for Multi-view Reconstruction of Surfaces with Arbitrary Topologies

Xiaoxiao Long, Cheng Lin, Lingjie Liu, Yuan Liu, Peng Wang, Christian Theobalt, Taku Komura, Wenping Wang Figure 1. We show three groups of multi-view reconstruction results generated by our proposed NeuralUDF

and NeuS respectively. Our method is able to faithfully reconstruct the highquality geometries for both

the closed and open surfaces, while NeuS can only model shapes as closed surfaces, thus leading to

inconsistent typologies and erroneous geometries.

Figure 1. We show three groups of multi-view reconstruction results generated by our proposed NeuralUDF

and NeuS respectively. Our method is able to faithfully reconstruct the highquality geometries for both

the closed and open surfaces, while NeuS can only model shapes as closed surfaces, thus leading to

inconsistent typologies and erroneous geometries.

NeuralUDF proposes utilizing an unsigned distance function representation for surfaces as opposed to a

signed distance function representation. A volume rendering scheme is introduced to learn the neural UDF

representation, allowing for high-quality reconstruction of non-closed shapes with complex topologies.

Sphere-Guided Training of Neural Implicit Surfaces

Andreea Dogaru, Andrei Timotei Ardelean, Savva Ignatyev, Egor Zakharov, Evgeny Burnaev Figure 1. We propose a new hybrid approach for learning neural implicit surfaces from multi-view images.

In previous methods, the volumetric ray marching training procedure is applied for the whole bounding

sphere of the scene (middle left). Instead, we train a coarse sphere-based surface reconstruction

(middle right) alongside the neural surface to guide the ray sampling and ray marching. As a result, our

method achieves an increased sampling efficiency by pruning empty scene space and better quality of

reconstructions (right).

Figure 1. We propose a new hybrid approach for learning neural implicit surfaces from multi-view images.

In previous methods, the volumetric ray marching training procedure is applied for the whole bounding

sphere of the scene (middle left). Instead, we train a coarse sphere-based surface reconstruction

(middle right) alongside the neural surface to guide the ray sampling and ray marching. As a result, our

method achieves an increased sampling efficiency by pruning empty scene space and better quality of

reconstructions (right).

SphereGuided is a method that makes use of a coarse sphere-based surface representation to increase

efficiency of ray marching in neural distance functions. The sphere-based representation is trained in

joint fashion with the Neural Distance Function. The joint representation leads to improved accuracy for

high-frequency details without requiring additional forward passes.

VDN-NeRF: Resolving Shape-Radiance Ambiguity via View-Dependence Normalization

Bingfan Zhu, Yanchao Yang, Xulong Wang, Youyi Zheng, Leonidas Guibas Figure 2. (a): When the geometry is correct, observations (the projections on the 2D image plane

represented by squares) of the same surface point from different viewpoints are similar; (b): When the

geometry is incorrect, i.e., a cube is reconstructed as a sphere, the radiance of a surface point (dot

on the sphere) can exhibit large directional view-dependence. However, as long as the viewdependent

radiance function has enough capacity (c in Eq. (1)), volume rendering of the wrong geometry can still

achieve small photometric reconstruction error. Thus, one should constrain the view-dependent capacity

of the radiance function to avoid overfitting; (c): On the other hand, when the surface is non-Lambertian

or the light field is unstable, one should not over-constrain the viewdependent capacity; otherwise, the

geometry may be traded for photometric reconstruction quality.

Figure 2. (a): When the geometry is correct, observations (the projections on the 2D image plane

represented by squares) of the same surface point from different viewpoints are similar; (b): When the

geometry is incorrect, i.e., a cube is reconstructed as a sphere, the radiance of a surface point (dot

on the sphere) can exhibit large directional view-dependence. However, as long as the viewdependent

radiance function has enough capacity (c in Eq. (1)), volume rendering of the wrong geometry can still

achieve small photometric reconstruction error. Thus, one should constrain the view-dependent capacity

of the radiance function to avoid overfitting; (c): On the other hand, when the surface is non-Lambertian

or the light field is unstable, one should not over-constrain the viewdependent capacity; otherwise, the

geometry may be traded for photometric reconstruction quality.

VDN-NeRF is an approach that normalizes the view-dependence of Neural Radiance Fields without explicitly

modeling the underlying factors. The method distills invariant information already present in the learned

NeRFs and applies it during joint training for view synthesis. This method improves geometry for

non-Lambertian surfaces and dynamic lighting without changing the volume rendering pipeline.

NeAT: Learning Neural Implicit Surfaces with Arbitrary Topologies from Multi-view Images

Xiaoxu Meng, Weikai Chen, Bo Yang Figure 1. We show three groups of surface reconstruction from multi-view images. The front and back

faces are rendered in blue and yellow respectively. Our method (left) is able to reconstruct

high-fidelity and intricate surfaces of arbitrary topologies, including those non-watertight structures,

e.g. the thin single-layer shoulder strap of the top (middle). In comparison, the state-of-the-art NeuS

method (right) can only generate watertight surfaces, resulting in thick, double-layer geometries.

Figure 1. We show three groups of surface reconstruction from multi-view images. The front and back

faces are rendered in blue and yellow respectively. Our method (left) is able to reconstruct

high-fidelity and intricate surfaces of arbitrary topologies, including those non-watertight structures,

e.g. the thin single-layer shoulder strap of the top (middle). In comparison, the state-of-the-art NeuS

method (right) can only generate watertight surfaces, resulting in thick, double-layer geometries.

NeAT proposes learning arbitrary surface topologies from multi-view images in a neural rendering

framework. The signed distance function is used to represent the 3D surface with a branch that estimates

surface existence probability. NeAT also includes neural volume rendering and performs well in

reconstructing both watertight and non-watertight surfaces.

SurfelNeRF: Neural Surfel Radiance Fields for Online Photorealistic Reconstruction of Indoor Scenes

Yiming Gao, Yan-Pei Cao, Ying Shan Figure 1. Examples to illustrate the task of online photorealistic reconstruction of an indoor scene.

The online photorealistic reconstruction of large-scale indoor scenes: given an online input image

stream of a previously unseen scene, the goal is to progressively build and update a scene

representation that allows for highquality rendering from novel views.

Figure 1. Examples to illustrate the task of online photorealistic reconstruction of an indoor scene.

The online photorealistic reconstruction of large-scale indoor scenes: given an online input image

stream of a previously unseen scene, the goal is to progressively build and update a scene

representation that allows for highquality rendering from novel views.

SurfelNeRF combines a neural radiance field with a surfel-based representation to achieve efficient online

reconstruction and high-quality rendering of large-scale indoor scenes. The method employs a flexible

neural network to store geometric attributes and extracted appearance features from input images. Results

show that SurfelNeRF outperforms state-of-the-art methods in both feedforward inference and per-scene

optimization settings.

ShadowNeuS: Neural SDF Reconstruction by Shadow Ray Supervision

Jingwang Ling, Zhibo Wang, Feng Xu Figure 1. Our method can reconstruct neural scenes from singleview images captured under multiple

lightings by effectively leveraging a novel shadow ray supervision scheme.

Figure 1. Our method can reconstruct neural scenes from singleview images captured under multiple

lightings by effectively leveraging a novel shadow ray supervision scheme.

ShadowNeuS introduces shadow ray supervision to NeRF and shows improvements in single-view shape

reconstruction from binary shadow as well as RGB images. The code and data are available at

'https://github.com/gerwang/ShadowNeuS'.

Nerflets: Local Radiance Fields for Efficient Structure-Aware 3D Scene Representation from 2D Supervision

Xiaoshuai Zhang, Abhijit Kundu, Thomas Funkhouser, Leonidas Guibas, Hao Su, Kyle Genova Figure 1. We propose to represent the scene with a set of local neural radiance fields, named nerflets,

which are trained with only 2D supervision. Our representation is not only useful for 2D tasks such as

novel view synthesis and panoptic segmentation, but also capable of solving 3D-oriented tasks such as 3D

segmentation and scene editing. The key idea is our learned structured decomposition (top right).

Figure 1. We propose to represent the scene with a set of local neural radiance fields, named nerflets,

which are trained with only 2D supervision. Our representation is not only useful for 2D tasks such as

novel view synthesis and panoptic segmentation, but also capable of solving 3D-oriented tasks such as 3D

segmentation and scene editing. The key idea is our learned structured decomposition (top right).

Nerflets are a newly proposed set of local neural radiance fields that perform efficient and

structure-aware 3D scene representation from images. Each nerflet contributes to panoptic, density, and

radiance reconstruction, optimized jointly to represent decomposed instances of the scene. Nerflets enable

the extraction of panoptic and photometric renderings from any view and enable tasks such as 3D panoptic

segmentation and interactive editing.

Regularize implicit neural representation by itself

Zhemin Li, Hongxia Wang, Deyu Meng Figure 1. Overview of proposed improve scheme for INR. (a) INR is a fully connected neural network which

maps from coordinate to pixel value. (b) INRR is a regularization term represented by an INR which can

capture the self-similarity. (c) INR-Z improve the performance of INR by combining the neighbor pixels

with coordinate together as the input of another INR.

Figure 1. Overview of proposed improve scheme for INR. (a) INR is a fully connected neural network which

maps from coordinate to pixel value. (b) INRR is a regularization term represented by an INR which can

capture the self-similarity. (c) INR-Z improve the performance of INR by combining the neighbor pixels

with coordinate together as the input of another INR.

INRR uses a learned Dirichlet Energy regularizer to improve the generalization ability of Implicit Neural

Representations (INR), particularly for non-uniformly sampled data. Its effectiveness is demonstrated

through numerical experiments and it can also improve other signal representation methods.

RobustNeRF: Ignoring Distractors with Robust Losses

Sara Sabour, Suhani Vora, Daniel Duckworth, Ivan Krasin, David J. Fleet, Andrea Tagliasacchi Figure 1. NeRF assumes photometric consistency in the observed images of a scene. Violations of this

assumption, as with the images in the top row, yield reconstructed scenes with inconsistent content in

the form of “floaters” (highlighted with ellipses). We introduce a simple technique that produces clean

reconstruction by automatically ignoring distractors without explicit supervision.

Figure 1. NeRF assumes photometric consistency in the observed images of a scene. Violations of this

assumption, as with the images in the top row, yield reconstructed scenes with inconsistent content in

the form of “floaters” (highlighted with ellipses). We introduce a simple technique that produces clean

reconstruction by automatically ignoring distractors without explicit supervision.

Robust NeRF proposes a solution for removing distractors (moving objects, lighting variations, shadows)

from static NeRF scenes during training. The method models the distractors as optimization outliers and

improves NeRF performance on synthetic and real-world datasets.

Multi-View Reconstruction using Signed Ray Distance Functions (SRDF)

Pierre Zins, Yuanlu Xu, Edmond Boyer, Stefanie Wuhrer, Tony Tung Figure 1. Reconstructions with various methods using 14 images of a model from BlendedMVS .

Figure 1. Reconstructions with various methods using 14 images of a model from BlendedMVS .

The authors propose a new optimization framework that combines volumetric integration with local depth

prediction in multi-view 3D shape reconstructions. Their approach demonstrates better geometry estimations

over existing methods, achieving pixel-accuracy while retaining the benefits of volumetric integration.

Self-supervised Super-plane for Neural 3D Reconstruction

Botao Ye, Sifei Liu, Xueting Li, Ming-Hsuan Yang Figure 1. Reconstruction and Plane Segmentation Results. Our method can reconstruct smooth and complete

planar regions by employing the super-plane constraint and further obtain plane segmentation in an

unsupervised manner.

Figure 1. Reconstruction and Plane Segmentation Results. Our method can reconstruct smooth and complete

planar regions by employing the super-plane constraint and further obtain plane segmentation in an

unsupervised manner.

S3PRecon introduces a self-supervised super-plane constraint for neural implicit surface representation

methods. The method improves the reconstruction of texture-less planar regions in indoor scenes without

requiring any other ground truth annotations. The use of super-planes proves to be more effective than

traditional annotated planes.

PET-NeuS: Positional Encoding Tri-Planes for Neural Surfaces

Yiqun Wang, Ivan Skorokhodov, Peter Wonka Figure 1. The challenge of using the tri-plane representation directly. First column: reference image.

Second to the fifth column: NeuS, Learning SDF using tri-planes, OURS without self-attention convolution,

and OURS.

Figure 1. The challenge of using the tri-plane representation directly. First column: reference image.

Second to the fifth column: NeuS, Learning SDF using tri-planes, OURS without self-attention convolution,

and OURS.

PET-NeuS extends NeuS with positional encoding, learnable convolution, and tri-planes as a mixture of MLP

and tri-planes. These improvements achieve high-fidelity reconstruction on standard datasets.

RefSR-NeRF: Towards High Fidelity and Super Resolution View Synthesis

Xudong Huang, Wei Li, Jie Hu, Hanting Chen, Yunhe Wang Figure 2. An overview of the reference-based SR model, which consists of a two-branch backbone and a

fusion module. The backbones extract the feature maps from degradation-dominant (LRRef and novel view

LR) and detail-dominant information (HR Ref and novel view LR) respectively. The two feature maps are

then fused and further refined by a fusion module.

Figure 2. An overview of the reference-based SR model, which consists of a two-branch backbone and a

fusion module. The backbones extract the feature maps from degradation-dominant (LRRef and novel view

LR) and detail-dominant information (HR Ref and novel view LR) respectively. The two feature maps are

then fused and further refined by a fusion module.

RefSR-NeRF is an end-to-end framework that reconstructs high frequency details of NeRF rendering that are

lost due to resolution explosion, by first generating a low-resolution NeRF model and then using a

high-resolution reference image to reconstruct the high frequency details. The proposed RefSR model learns

the inverse degradation process from NeRF to the target high-resolution image and outperforms NeRF and its

variants in terms of speed, memory usage, and rendering quality.

DynIBaR: Neural Dynamic Image-Based Rendering

Zhengqi Li, Qianqian Wang, Forrester Cole, Richard Tucker, Noah Snavely Figure 1. Recent methods for synthesizing novel views from monocular videos of dynamic scenes–like

HyperNeRF – struggle to render high-quality views from long videos featuring complex camera and scene

motion. We present a new approach that addresses these limitations, illustrated above via an application

to 6DoF video stabilization, where we apply our approach and prior methods on a 30-second, shaky video

clip, and compare novel views rendered along a smoothed camera path (left). On a dynamic scenes dataset

(right) , our approach significantly improves rendering fidelity, as indicated by synthesized images and

LPIPS errors computed on pixels corresponding to moving objects (yellow numbers). Please see the

supplementary video for full results.

Figure 1. Recent methods for synthesizing novel views from monocular videos of dynamic scenes–like

HyperNeRF – struggle to render high-quality views from long videos featuring complex camera and scene

motion. We present a new approach that addresses these limitations, illustrated above via an application

to 6DoF video stabilization, where we apply our approach and prior methods on a 30-second, shaky video

clip, and compare novel views rendered along a smoothed camera path (left). On a dynamic scenes dataset

(right) , our approach significantly improves rendering fidelity, as indicated by synthesized images and

LPIPS errors computed on pixels corresponding to moving objects (yellow numbers). Please see the

supplementary video for full results.

DynIBAR synthesizes novel views from a video with a volumetric image-based rendering approach, which

synthesizes new viewpoints by aggregating features from nearby views in a scene-motion-aware manner. The

method allows for photo-realistic novel view synthesis from long videos featuring complex scene dynamics.

F2-NeRF: Fast Neural Radiance Field Training with Free Camera Trajectories

Peng Wang, Yuan Liu, Zhaoxi Chen, Lingjie Liu, Ziwei Liu, Taku Komura, Christian Theobalt, Wenping Wang Figure 1. Top: (a) Forward-facing camera trajectory. (b) 360◦

Figure 1. Top: (a) Forward-facing camera trajectory. (b) 360◦

F^2-NeRF is introduced as a novel fast and free grid-based NeRF for novel view synthesis, called

Fast-Free-NeRF. F^2-NeRF proposes a new perspective warping approach that enables handling of arbitrary

camera trajectories to handle both bounded and unbounded scenes. The proposed method is fast and can still

obtain high performance with a few minutes of training.

NeuDA: Neural Deformable Anchor for High-Fidelity Implicit Surface Reconstruction

Bowen Cai, Jinchi Huang, Rongfei Jia, Chengfei Lv, Huan Fu Figure 1. We show the surface reconstruction results produced by NeuDA and the two baseline methods,

including NeuS and Intsnt-NeuS . Intsnt-NeuS is the reproduced NeuS leveraging the multi-resolution hash

encoding technique . We can see NeuDA can promisingly preserve more surface details. Please refer to

Figure 5 for more qualitative comparisons.

Figure 1. We show the surface reconstruction results produced by NeuDA and the two baseline methods,

including NeuS and Intsnt-NeuS . Intsnt-NeuS is the reproduced NeuS leveraging the multi-resolution hash

encoding technique . We can see NeuDA can promisingly preserve more surface details. Please refer to

Figure 5 for more qualitative comparisons.

NeuDA utilizes hierarchical anchor grids to improve surface reconstruction. These anchor grids encode both

high- and low-frequency geometry and appearance with a simple hierarchical positional encoding method. The

model shows substantial improvement on reconstruction over prior work.

PlenVDB: Memory Efficient VDB-Based Radiance Fields for Fast Training and Rendering

Han Yan, Celong Liu, Chao Ma, Xing Mei Figure 1. We propose PlenVDB, a sparse volume data structure for accelerating NeRF training and

rendering. Given a set of training views, our method directly optimizes a VDB model. Then a novel view

can be rendered with the model. Two advantages, i.e., fast voxel access for faster speed, and efficient

storage for smaller model size, enable efficient NeRF rendering on mobile devices.

Figure 1. We propose PlenVDB, a sparse volume data structure for accelerating NeRF training and

rendering. Given a set of training views, our method directly optimizes a VDB model. Then a novel view

can be rendered with the model. Two advantages, i.e., fast voxel access for faster speed, and efficient

storage for smaller model size, enable efficient NeRF rendering on mobile devices.

PlenVDB accelerates training and inference in neural radiance fields by using the VDB data structure.

PlenVDB is shown to be more efficient in training, data representation, and rendering than previous

methods and can achieve high FPS on mobile devices.

SeaThru-NeRF: Neural Radiance Fields in Scattering Media

Deborah Levy, Amit Peleg, Naama Pearl, Dan Rosenbaum, Derya Akkaynak, Simon Korman, Tali Treibitz Figure 1. NeRFs have not yet tackled scenes in which the medium strongly influences the appearances of

objects, as in the case of underwater imagery. By incorporating a scattering image formation model into

the NeRF rendering equations, we are able to separate the scene into ‘clean’ and backscatter components.

Consequently, we can render photorealistic novel-views with or without the participating medium, in the

latter case recovering colors as if the image was taken in clear air. Results on the Curac¸ao scene: A

RAW image (left) is brightened and white balanced (WB) for visualization, showing more detail, while

areas further from the camera (top-right corner) are occluded and attenuated by severe backscatter which

is effectively removed in our restored image. Please zoom-in to observe the details.

Figure 1. NeRFs have not yet tackled scenes in which the medium strongly influences the appearances of

objects, as in the case of underwater imagery. By incorporating a scattering image formation model into

the NeRF rendering equations, we are able to separate the scene into ‘clean’ and backscatter components.

Consequently, we can render photorealistic novel-views with or without the participating medium, in the

latter case recovering colors as if the image was taken in clear air. Results on the Curac¸ao scene: A

RAW image (left) is brightened and white balanced (WB) for visualization, showing more detail, while

areas further from the camera (top-right corner) are occluded and attenuated by severe backscatter which

is effectively removed in our restored image. Please zoom-in to observe the details.

The authors introduce SeaThru-NeRF for rendering scenes in scattering media such as underwater or foggy

scenes. The X-NeRF architecture also allows for the medium's parameters to be learnt from data along with

the scene.

DINER: Depth-aware Image-based NEural Radiance fields

Malte Prinzler, Otmar Hilliges, Justus Thies Figure 1. Based on sparse input views, we predict depth and feature maps to infer a volumetric scene

representation in terms of a radiance field which enables novel viewpoint synthesis. The depth

information allows us to use input views with high relative distance such that the scene can be captured

more completely and with higher synthesis quality compared to previous state-of-the-art methods.

Figure 1. Based on sparse input views, we predict depth and feature maps to infer a volumetric scene

representation in terms of a radiance field which enables novel viewpoint synthesis. The depth

information allows us to use input views with high relative distance such that the scene can be captured

more completely and with higher synthesis quality compared to previous state-of-the-art methods.

DINER uses depth and feature maps to guide volumetric scene representation for 3D rendering of novel

views. The method incorporates depth information in feature fusion and uses efficient scene sampling,

resulting in higher synthesis quality even for input views with high disparity.

Masked Wavelet Representation for Compact Neural Radiance Fields

Daniel Rho, Byeonghyeon Lee, Seungtae Nam, Joo Chan Lee, Jong Hwan Ko, Eunbyung Park Figure 1. Rate-distortion curves on the NeRF synthetic dataset. The numbers inside parenthesis denote

the axis resolution of grids.

Figure 1. Rate-distortion curves on the NeRF synthetic dataset. The numbers inside parenthesis denote

the axis resolution of grids.

The authors propose a new method for representing wavelet-transformed grid-based neural fields in a more

memory-efficient way. They achieved state-of-the-art compression results while still maintaining

high-quality reconstruction.

Exact-NeRF: An Exploration of a Precise Volumetric Parameterization for Neural Radiance Fields

Brian K. S. Isaac-Medina, Chris G. Willcocks, Toby P. Breckon Figure 1. Comparison of Exact-NeRF (ours) with mip-NeRF 360 . Our method is able to both match the

performance and obtain superior depth estimation over a larger depth of field.

Figure 1. Comparison of Exact-NeRF (ours) with mip-NeRF 360 . Our method is able to both match the

performance and obtain superior depth estimation over a larger depth of field.

Exact-NeRF improves mip-NeRF, which approximates the Integrated Positional Encoding (IPE) using an

expected value of a multivariate Gaussian. Exact-NeRF instead uses a pyramid-based integral formulation to

calculate the IPE exactly, leading to improved accuracy and a natural extension in the case of unbounded

scenes.

NeRFLiX: High-Quality Neural View Synthesis by Learning a Degradation-Driven Inter-viewpoint MiXer

Kun Zhou, Wenbo Li, Yi Wang, Tao Hu, Nianjuan Jiang, Xiaoguang Han, Jiangbo Lu Figure 1. We propose NeRFLiX, a general NeRF-agnostic restorer that is capable of improving neural view

synthesis quality. The first example is from Tanks and Temples , and the last one is a user scene

captured by a mobile phone. RegNeRF-V3 means the model trained with three input views.

Figure 1. We propose NeRFLiX, a general NeRF-agnostic restorer that is capable of improving neural view

synthesis quality. The first example is from Tanks and Temples , and the last one is a user scene

captured by a mobile phone. RegNeRF-V3 means the model trained with three input views.

NeRFLiX restores realistic details in synthesized images by leveraging aggregation: it can effectively

remove artifacts with a degradation-driven mixer of large-scale NeRF training data. Beyond the artifact

removal, the proposed inter-viewpoint aggregation framework improves the performance and photorealism of

existing NeRF models.

NeUDF: Leaning Neural Unsigned Distance Fields with Volume Rendering

Yu-Tao Liu, Li Wang, Jie yang, Weikai Chen, Xiaoxu Meng, Bo Yang, Lin Gao Figure 1. We show comparisons of the input multi-view images (top), watertight surfaces (middle)

reconstructed with state-of-the-art SDF-based volume rendering method NeuS , and open surfaces (bottom)

reconstructed with our method. Our method is capable of reconstructing high-fidelity shapes with both

open and closed surfaces from multi-view images.

Figure 1. We show comparisons of the input multi-view images (top), watertight surfaces (middle)

reconstructed with state-of-the-art SDF-based volume rendering method NeuS , and open surfaces (bottom)

reconstructed with our method. Our method is capable of reconstructing high-fidelity shapes with both

open and closed surfaces from multi-view images.

NeUDF is an implicit neural surface representation that uses the unsigned distance function to enable the

reconstruction of arbitrary surfaces from multiple views. It addresses issues arising due to the volume of

the sigmoid function and the surface orientation ambiguity in open surfaces rendering.

TINC: Tree-structured Implicit Neural Compression

Runzhao Yang, Tingxiong Xiao, Yuxiao Cheng, Jinli Suo, Qionghai Dai Figure 1. The scheme of the proposed approach TINC. For a target data, we divide the volume into

equal-size blocks via octree partitioning, with some neighboring and far-apart blocks of similar

appearances. Then each block can be representation with an implicit neural function, implemented as an

MLP. After sharing parameters among similar blocks, we can achieve a more compact neural network with a

tree shaped structure. Here we highlight the similar blocks sharing network parameters with the same

color.

Figure 1. The scheme of the proposed approach TINC. For a target data, we divide the volume into

equal-size blocks via octree partitioning, with some neighboring and far-apart blocks of similar

appearances. Then each block can be representation with an implicit neural function, implemented as an

MLP. After sharing parameters among similar blocks, we can achieve a more compact neural network with a

tree shaped structure. Here we highlight the similar blocks sharing network parameters with the same

color.

TINC is a new compression technique for implicit neural representation (INR) that uses a tree-structured

MLP to fit local regions and extract shared features. This approach improves the compression of INR and

outperforms competing methods.

ABLE-NeRF: Attention-Based Rendering with Learnable Embeddings for Neural Radiance Field

Zhe Jun Tang, Tat-Jen Cham, Haiyu Zhao Figure 1. We illustrate two views of the Blender ’Drums’ Scene. The surface of the drums exhibit either

a translucent surface or a reflective surface at different angles. As shown, Ref-NeRF model has severe

difficulties interpolating between the translucent and reflective surfaces as the viewing angle changes.

Our method demonstrates its superiority over NeRF rendering models by producing such accurate

view-dependent effects. In addition, the specularity of the cymbals are rendered much closer to ground

truth compared to Ref-NeRF.

Figure 1. We illustrate two views of the Blender ’Drums’ Scene. The surface of the drums exhibit either

a translucent surface or a reflective surface at different angles. As shown, Ref-NeRF model has severe

difficulties interpolating between the translucent and reflective surfaces as the viewing angle changes.

Our method demonstrates its superiority over NeRF rendering models by producing such accurate

view-dependent effects. In addition, the specularity of the cymbals are rendered much closer to ground

truth compared to Ref-NeRF.

ABLE-NeRF is a self-attention-based alternative to traditional volume rendering. It utilizes learnable

embeddings to better represent view-dependent effects, resulting in superior rendering of glossy and

translucent surfaces compared to prior work. ABLE-NeRF outperforms Ref-NeRF on all 3 image quality metrics

in the Blender dataset.

Hybrid Neural Rendering for Large-Scale Scenes with Motion Blur

Peng Dai, Yinda Zhang, Xin Yu, Xiaoyang Lyu, Xiaojuan Qi Figure 1. Our hybrid neural rendering model generates highfidelity novel view images. Please note

characters in the book where the result of Point-Nerf is blurry and the GT is contaminated by blur

artifacts.

Figure 1. Our hybrid neural rendering model generates highfidelity novel view images. Please note

characters in the book where the result of Point-Nerf is blurry and the GT is contaminated by blur

artifacts.

The hybrid rendering model proposed integrates image-based and neural 3D representations to jointly

produce view-consistent novel views of large-scale scenes, handling naturally-occurring artifacts such as

motion blur. The authors also propose to simulate blur effects on rendered images with quality-aware

weights, leading to better results for novel view synthesis than competing point-based methods.

Seeing Through the Glass: Neural 3D Reconstruction of Object Inside a Transparent Container

Jinguang Tong, Sundaram Muthu, Fahira Afzal Maken, Chuong Nguyen, Hongdong Li Figure 2. An overview of the ReNeuS framework. The scene is separated into two sub-spaces w.r.t. the

interface. The internal scene is represented by two multi-layer perceptrons (MLPs) for both geometry and

appearance. For neural rendering, we recursively trace a ray through the scene and collect a list of

sub-rays. Our neural implicit representation makes the tracing process controllable. We show a ray

tracing process with the depth of recursion Dre = 3 here. Color accumulation is conducted on irradiance

of the sub-rays in the inverse direction of ray tracing. We optimized the network by the difference

between rendered image and the ground truth image. Target mesh can be extracted from SDF by Marching

Cubes

Figure 2. An overview of the ReNeuS framework. The scene is separated into two sub-spaces w.r.t. the

interface. The internal scene is represented by two multi-layer perceptrons (MLPs) for both geometry and

appearance. For neural rendering, we recursively trace a ray through the scene and collect a list of

sub-rays. Our neural implicit representation makes the tracing process controllable. We show a ray

tracing process with the depth of recursion Dre = 3 here. Color accumulation is conducted on irradiance

of the sub-rays in the inverse direction of ray tracing. We optimized the network by the difference

between rendered image and the ground truth image. Target mesh can be extracted from SDF by Marching

Cubes

ReNeuS proposes a neural rendering approach for reconstructing the 3D geometry of an object confined in a

transparent enclosure. The method models the scene as two subspaces, one inside the transparent enclosure

and another outside it. ReNeuS also presents a hybrid rendering strategy for complex light interactions

and combines volume rendering with ray tracing.

Real-Time Neural Light Field on Mobile Devices

Junli Cao, Huan Wang, Pavlo Chemerys, Vladislav Shakhrai, Ju Hu, Yun Fu, Denys Makoviichuk, Sergey Tulyakov, Jian Ren Figure 1. Examples of deploying our approach on mobile devices for real-time interaction with users. Due

to the small model size (8.3MB) and fast inference speed (18 ∼ 26ms per image on iPhone 13), we can

build neural rendering applications where users interact with 3D objects on their devices, enabling

various applications such as virtual try-on. We use publicly available software to make the on-device

application for visualization .

Figure 1. Examples of deploying our approach on mobile devices for real-time interaction with users. Due

to the small model size (8.3MB) and fast inference speed (18 ∼ 26ms per image on iPhone 13), we can

build neural rendering applications where users interact with 3D objects on their devices, enabling

various applications such as virtual try-on. We use publicly available software to make the on-device

application for visualization .

The authors introduce a lightweight and mobile-friendly architecture for real-time neural rendering with

NeLF techniques. The proposed network achieves high quality results while being small and efficient,

outperforming MobileNeRF in terms of speed and storage needs.

Priors and Generative

Priors can either aid in the reconstruction or can be used in a generative manner. For example, in the reconstruction, priors either increase the quality of neural view synthesis or enable reconstructions from sparse image collections.Learning 3D-aware Image Synthesis with Unknown Pose Distribution

Zifan Shi, Yujun Shen, Yinghao Xu, Sida Peng, Yiyi Liao, Sheng Guo, Qifeng Chen, Dit-Yan Yeung Figure 2. Framework of PoF3D, which consists of a pose-free generator and a pose-aware discriminator.

The pose-free generator maps a latent code to a neural radiance field as well as a camera pose, followed

by a volume renderer (VR) to output the final image. The pose-aware discriminator first predicts a

camera pose from the given image and then use it as the pseudo label for conditional real/fake

discrimination, indicated by the orange arrow.

Figure 2. Framework of PoF3D, which consists of a pose-free generator and a pose-aware discriminator.

The pose-free generator maps a latent code to a neural radiance field as well as a camera pose, followed

by a volume renderer (VR) to output the final image. The pose-aware discriminator first predicts a

camera pose from the given image and then use it as the pseudo label for conditional real/fake

discrimination, indicated by the orange arrow.

PoF3D is a generative model for 3D image synthesis that needs no prior on the 3D object pose. It first

infers the pose from the latent code using an efficient pose learner. Then, it trains the discriminator to

differentiate between synthesized images with the predicted pose as a condition and real images. Jointly

trained in an adversarial manner, PoF3D generates high-quality 3D-aware image synthesis.

Painting 3D Nature in 2D: View Synthesis of Natural Scenes from a Single Semantic Mask

Shangzhan Zhang, Sida Peng, Tianrun Chen, Linzhan Mou, Haotong Lin, Kaicheng Yu, Yiyi Liao, Xiaowei Zhou Figure 1. Given only a single semantic map as input (first row), our approach optimizes neural fields for

view synthesis of natural scenes. Photorealistic images can be rendered via neural fields (the last two

rows).

Figure 1. Given only a single semantic map as input (first row), our approach optimizes neural fields for

view synthesis of natural scenes. Photorealistic images can be rendered via neural fields (the last two

rows).

The paper presents a method for synthesizing multi-view consistent color images of natural scenes from

single semantic masks, using a semantic field as the intermediate representation. The method outperforms

baseline methods and produces photorealistic, multi-view consistent videos without requiring multi-view

supervision or category-level priors.

Shape, Pose, and Appearance from a Single Image via Bootstrapped Radiance Field Inversion

Dario Pavllo, David Joseph Tan, Marie-Julie Rakotosaona, Federico Tombari Figure 1. Given a collection of 2D images representing a specific category (e.g. cars), we learn a model

that can fully recover shape, pose, and appearance from a single image, without leveraging multiple

views during training. The 3D shape is parameterized as a signed distance function (SDF), which

facilitates its transformation to a triangle mesh for further downstream applications.

Figure 1. Given a collection of 2D images representing a specific category (e.g. cars), we learn a model

that can fully recover shape, pose, and appearance from a single image, without leveraging multiple

views during training. The 3D shape is parameterized as a signed distance function (SDF), which

facilitates its transformation to a triangle mesh for further downstream applications.

An end-to-end hybrid inversion NeRF is introduced to recover 3D shape, pose, and appearance from a single

image. Unlike prior art which made use of multiple views during training, the new approach is capable of

recovering the geometry, pose, and appearance of an object with a single image, and demonstrates

state-of-the-art results on multiple real and artificial rendering tasks.

Local Implicit Ray Function for Generalizable Radiance Field Representation

Xin Huang, Qi Zhang, Ying Feng, Xiaoyu Li, Xuan Wang, Qing Wang Figure 1. We propose LIRF to reconstruct radiance fields of unseen scenes for novel view synthesis.

Given that current generalizable NeRF-like methods cast an infinitesimal ray to render a pixel at

different scales, it causes excessive blurring and aliasing. Our method instead reasons about 3D conical

frustums defined by the neighbor rays through the neighbor pixels (as shown in (a)). Our LIRF outputs

the feature of any sample within the conical frustum in a continuous manner (as shown in (b)), which

supports NeRF reconstruction at arbitrary scales. Compared with the previous method, our method can be

generalized to represent the same unseen scene at multiple levels of details (as shown in (c)).

Specifically, given a set of input views at a consistent image scale ×1, LIRF enables our method to both

preserve sharp details in close-up shots (anti-blurring as shown in ×2 and ×4 results) and correctly

render the zoomed-out images (anti-aliasing as shown in ×0.5 results).

Figure 1. We propose LIRF to reconstruct radiance fields of unseen scenes for novel view synthesis.

Given that current generalizable NeRF-like methods cast an infinitesimal ray to render a pixel at

different scales, it causes excessive blurring and aliasing. Our method instead reasons about 3D conical

frustums defined by the neighbor rays through the neighbor pixels (as shown in (a)). Our LIRF outputs

the feature of any sample within the conical frustum in a continuous manner (as shown in (b)), which

supports NeRF reconstruction at arbitrary scales. Compared with the previous method, our method can be

generalized to represent the same unseen scene at multiple levels of details (as shown in (c)).

Specifically, given a set of input views at a consistent image scale ×1, LIRF enables our method to both

preserve sharp details in close-up shots (anti-blurring as shown in ×2 and ×4 results) and correctly

render the zoomed-out images (anti-aliasing as shown in ×0.5 results).

LIRF is a generalizable neural rendering approach for novel view rendering that aggregates information

from conical frustums to construct a ray. The method outperforms state-of-the-art methods on novel view

rendering of unseen scenes at arbitrary scales.

Multiview Compressive Coding for 3D Reconstruction

Chao-Yuan Wu, Justin Johnson, Jitendra Malik, Christoph Feichtenhofer, Georgia Gkioxari Figure 1. Multiview Compressive Coding (MCC). (a): MCC encodes an input RGB-D image and uses an

attention-based model to predict the occupancy and color of query points to form the final 3D

reconstruction. (b): MCC generalizes to novel objects captured with iPhones (left) or imagined by DALL·E

2 (middle). It is also general – it works not only on objects but also scenes (right).

Figure 1. Multiview Compressive Coding (MCC). (a): MCC encodes an input RGB-D image and uses an

attention-based model to predict the occupancy and color of query points to form the final 3D

reconstruction. (b): MCC generalizes to novel objects captured with iPhones (left) or imagined by DALL·E

2 (middle). It is also general – it works not only on objects but also scenes (right).

MCC is a framework for single-view 3D reconstruction that can learn representations from 3D points of

objects or scenes. Through large-scale training from RGB-D videos, generalization is improved.

Generalizable Implicit Neural Representations via Instance Pattern Composers

Chiheon Kim, Doyup Lee, Saehoon Kim, Minsu Cho, Wook-Shin Han Figure 1. The reconstructed images of 178×178 ImageNette by TransINR (left) and our generalizable INRs

(right).

Figure 1. The reconstructed images of 178×178 ImageNette by TransINR (left) and our generalizable INRs

(right).

The authors propose a generalizable implicit neural representation that can represent complex data

instances by modulating a small set of weights in early MLP layers as an instance pattern composer. The

remaining weights learn composition rules for common representation across instances. The method's

flexibility is shown by testing it on audio, image, and 3D data.

DP-NeRF: Deblurred Neural Radiance Field with Physical Scene Priors

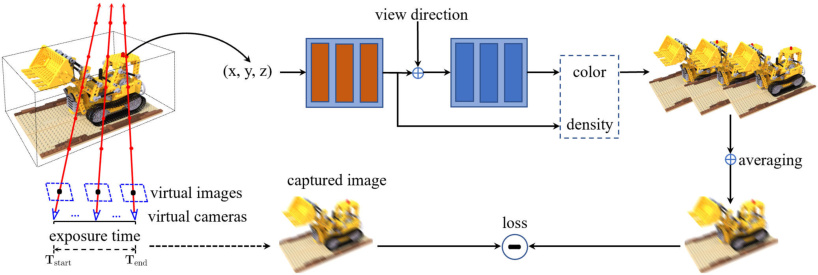

Dogyoon Lee, Minhyeok Lee, Chajin Shin, Sangyoun Lee Figure 2. Overall pipeline for DP-NeRF. DP-NeRF consists of three stages. (a) The rigid blurring kernel

(RBK) constructs the blurring system using the SE(3) Field based on the physical priors. (b) The

adaptive weight proposal (AWP) refines the composition weights using the depth feature (ζp i,j) of the

samples on the ray of the target pixel (p), the scene (s) information, and the rigidly transformed ray

directions (rp s;0,...,k). (c) Finally the coarse and fine blurred colors, ˆBp and ˜Bp, are composited

using the weighted sum of the ray transformed colors. Lc and Lf denote the coarse and fine RGB

reconstruction loss, respectively.

Figure 2. Overall pipeline for DP-NeRF. DP-NeRF consists of three stages. (a) The rigid blurring kernel

(RBK) constructs the blurring system using the SE(3) Field based on the physical priors. (b) The

adaptive weight proposal (AWP) refines the composition weights using the depth feature (ζp i,j) of the

samples on the ray of the target pixel (p), the scene (s) information, and the rigidly transformed ray

directions (rp s;0,...,k). (c) Finally the coarse and fine blurred colors, ˆBp and ˜Bp, are composited

using the weighted sum of the ray transformed colors. Lc and Lf denote the coarse and fine RGB

reconstruction loss, respectively.

DP-NeRF is an adaptation of the NeRF model to handle blurred images with physical priors, providing better

geometric and appearance consistency. Specifically, it introduces a rigid blurring kernel and adaptive

weight proposal to refine the color composition error while taking into consideration the relationship

between depth and blur.

DIFu: Depth-Guided Implicit Function for Clothed Human Reconstruction

Dae-Young Song, HeeKyung Lee, Jeongil Seo, Donghyeon Cho Figure 2. An Overview of our method. (a) First, the hallucinator generates a back-side image IB using IF

. The depth estimator receives IF and IB and estimates a front depth map DF and a back depth map DB

simultaneously. (b) With IF , IB, DF , and DB, the 2D encoder extracts a feature map to be transformed

into the pixel-aligned feature. Meanwhile, DF and DB are projected to form a depth volume V . A 3D

feature map is extracted by the 3D encoder from V , and aligned to the voxel-aligned feature. Both

aligned features are concatenated in the channel axis and used by MLPs to estimate the final occupancy

vector for given query points.

Figure 2. An Overview of our method. (a) First, the hallucinator generates a back-side image IB using IF

. The depth estimator receives IF and IB and estimates a front depth map DF and a back depth map DB

simultaneously. (b) With IF , IB, DF , and DB, the 2D encoder extracts a feature map to be transformed

into the pixel-aligned feature. Meanwhile, DF and DB are projected to form a depth volume V . A 3D

feature map is extracted by the 3D encoder from V , and aligned to the voxel-aligned feature. Both

aligned features are concatenated in the channel axis and used by MLPs to estimate the final occupancy

vector for given query points.

DIFu is an IF-based method for reconstructing clothed human from a single image. It proposes new encoders

and networks to extract features from both 2D and 3D space, utilizing a depth prior to generate a 3D

representation of a human body in addition to an RGB image. The method also estimates colors of 3D points

using a texture inference branch.

HumanGen: Generating Human Radiance Fields with Explicit Priors